Beyond Individual Bots: How Bio-Inspired AI and Swarm Intelligence Are Revolutionizing Adaptive Robotic Systems

Have you ever watched a colony of ants efficiently build complex structures or a flock of birds move in perfect synchronicity? These natural phenomena are not governed by a central leader but by simple local interactions between individuals. This concept is at the heart of swarm robotics, a field that combines the power of bio-inspired Artificial Intelligence (AI) with the collective strength of many simple robots to create incredibly adaptive and robust systems.

Instead of building one large, complex, and expensive robot, imagine deploying hundreds or even thousands of small, simple, and inexpensive robots. These "bots" don't need a master controller. Their collective behavior, or "swarm intelligence," emerges from their local interactions, much like how a brain's complex functions emerge from simple neuron connections.

The Core Principles: Simplicity, Decentralization, and Emergence

Swarm robotics thrives on a few key principles:

- Decentralized Control: No single robot or external system dictates the actions of the entire swarm.

- Self-Organization: The swarm can adapt and reconfigure itself dynamically to changing conditions or new tasks without human intervention.

- Local Communication: Robots primarily interact with their immediate neighbors, exchanging limited information. Because each robot's communication load does not grow with the size of the swarm, the system stays scalable and robust.

- Redundancy and Robustness: If a few robots fail, the swarm can still accomplish its mission because the task is distributed among many.

This approach mimics nature's proven strategies for resilience and efficiency.
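To make these principles concrete, here is a minimal sketch of decentralized agreement, assuming a toy setup that is not from any particular robot platform: the robots sit on a ring, each holds one number (say, a heading estimate), and each repeatedly averages its value with its two nearest neighbors. The function names, the ring layout, and the failure model are all illustrative assumptions.

```python
import random


def step(values, radius=1):
    """One synchronous update: every robot replaces its value with the
    average of its own value and those of its ring neighbors."""
    n = len(values)
    updated = []
    for i in range(n):
        # Local communication only: look `radius` robots to each side.
        neighborhood = [values[(i + d) % n] for d in range(-radius, radius + 1)]
        updated.append(sum(neighborhood) / len(neighborhood))
    return updated


def spread(values):
    """Disagreement across the swarm: largest value minus smallest."""
    return max(values) - min(values)


def run_swarm(n_robots=20, n_steps=300, n_failures=5, seed=0):
    """Run the toy swarm and return (initial spread, final spread, survivors)."""
    rng = random.Random(seed)
    values = [rng.uniform(0.0, 100.0) for _ in range(n_robots)]
    initial_spread = spread(values)
    for t in range(n_steps):
        if t == n_steps // 2:
            # Simulated failures: some robots drop out and the ring closes up.
            failed = set(rng.sample(range(len(values)), n_failures))
            values = [v for i, v in enumerate(values) if i not in failed]
        values = step(values)
    return initial_spread, spread(values), len(values)


if __name__ == "__main__":
    before, after, survivors = run_swarm()
    print(f"spread before: {before:.2f}, after: {after:.6f}, robots left: {survivors}")
```

No robot ever sees the whole swarm, yet the disagreement shrinks toward zero, and losing a quarter of the robots midway does not stop the rest from converging. Real swarms face delays, noise, and changing neighbor sets, so treat this as an illustration of the idea rather than a controller design.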

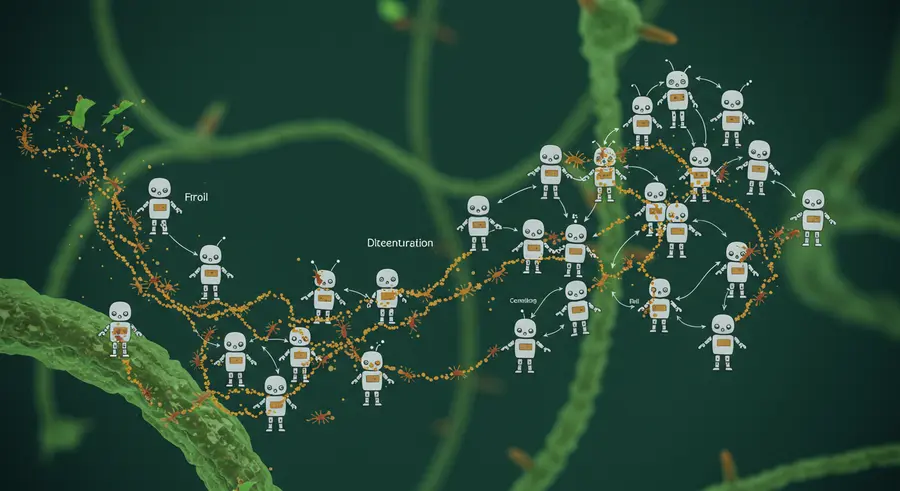

Here's a visual representation of how simple, local interactions can lead to complex collective behavior:

Where We're Seeing the Swarm in Action

The potential applications of bio-inspired AI and swarm robotics are vast and exciting:

- Disaster Response: Swarms can explore dangerous, unstable environments (like collapsed buildings or contaminated zones) to search for survivors and map areas without risking human lives.

- Agriculture: Tiny agricultural robots can monitor soil conditions, precisely apply fertilizers, or remove weeds, leading to more efficient and sustainable farming.

- Space Exploration: Imagine a fleet of robotic explorers working together to map distant planets, repair spacecraft, or even build structures in extraterrestrial environments.

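- Logistics and Warehousing: Companies like Amazon already use large fleets of autonomous robots to sort and transport packages, improving efficiency and reducing operational costs. These fleets are typically coordinated centrally rather than run as true swarms, but they show what many cooperating machines can achieve.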

- Environmental Monitoring: Swarms of sensors can monitor large areas for pollution, climate change, or deforestation, providing valuable data that would be impractical to collect with a single unit.

The Design Challenge: Bridging the "Reality Gap"

While the promise is huge, designing these systems isn't without its challenges. One major hurdle is the "reality gap." We often design and test robot behaviors in simulations, but real-world environments are far more complex and unpredictable. What works perfectly in a digital simulation might fail in reality due to subtle differences in physics, sensor noise, or unexpected interactions.

Traditional methods often involve manual programming or "offline" automatic design where control software is developed and optimized in a simulation before deployment. However, researchers are now focusing on methods that are inherently robust to this gap, or even "online" design processes where robots learn and adapt while performing their mission in the real world.

For instance, robot evolution and robot learning are key areas:

- Evolutionary Swarm Robotics: Here, control software for robots is generated through artificial evolutionary processes. Think of it like natural selection for code: poorly performing behaviors are discarded, and successful ones are refined over generations. Neuro-evolution (using neural networks) and automatic modular design (assembling behaviors from pre-defined modules) are common techniques.

- Multi-Agent Reinforcement Learning: Robots learn optimal actions by trial and error, based on rewards from their environment. The challenge is often how to define rewards for individual robots that contribute to the overall collective goal.

- Imitation Learning: Instead of defining rewards, robots learn by observing and imitating demonstrated behaviors. This is particularly promising for complex tasks where explicitly programming rules is difficult.
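The evolutionary approach in the list above can be sketched in a few lines. This is a toy under stated assumptions, not a real swarm-robotics pipeline: the "control software" is a single proportional gain rather than a neural network, the simulation is a one-dimensional robot driving toward a target, and `fitness`, `evolve`, and all parameter values are hypothetical names chosen for illustration.

```python
import random


def fitness(gain, steps=10, start=10.0, target=0.0):
    """Score a proportional controller in a toy 1-D simulation.
    Higher is better: the negative accumulated distance to the target."""
    position = start
    total_error = 0.0
    for _ in range(steps):
        position += gain * (target - position)  # controller acts on the error
        total_error += abs(target - position)
    return -total_error


def evolve(pop_size=20, generations=40, mutation_std=0.1, seed=1):
    """Evolve the controller gain with selection, mutation, and elitism.
    Returns the best gain found and the best fitness seen per generation."""
    rng = random.Random(seed)
    population = [rng.uniform(0.0, 2.0) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        # Selection: poorly performing controllers are discarded.
        survivors = ranked[: pop_size // 2]
        # Variation: each survivor produces one mutated offspring.
        offspring = [g + rng.gauss(0.0, mutation_std) for g in survivors]
        population = survivors + offspring
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best_gain, history = evolve()
    print(f"best gain: {best_gain:.3f}")
    print(f"fitness, first generation: {history[0]:.3f}, last: {history[-1]:.3f}")
```

In this toy world a gain of 1.0 reaches the target in a single step, so the population drifts toward that value over the generations. Note that this is the "offline" style of design: the controller is optimized entirely in simulation, which is exactly where the reality gap can bite once it is transferred to hardware.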

The goal is to develop adaptive systems in which the behavior needed for complex tasks emerges from the swarm itself, rather than having every single action pre-programmed. This aligns with the principle that "the most robust solutions don't command, they emerge."

Looking Ahead

The field of bio-inspired AI and swarm robotics is rapidly advancing. We are moving toward a future where intelligent, collaborative robot swarms help solve some of humanity's most pressing challenges. From large-scale infrastructure maintenance to personalized healthcare, the collective intelligence of these machines could "optimize the collective" and "decentralize the insight," leading to more resilient and scalable technology. The possibilities are truly swarming!